The bitdrift ring buffer: time travel, science fiction no more

Dive deep into the special sauce that underpins the Capture SDK: the ring buffer. This post covers the gory technical details.

The core of Capture is what we like to call the ring buffer. This post dives deep into the technical design and implementation of the ring buffer. If the reader is unfamiliar with Capture we recommend first reading our SDK overview blog post. From this point forward it’s going to get nerdy so grab a beverage of choice and get ready to dive in!

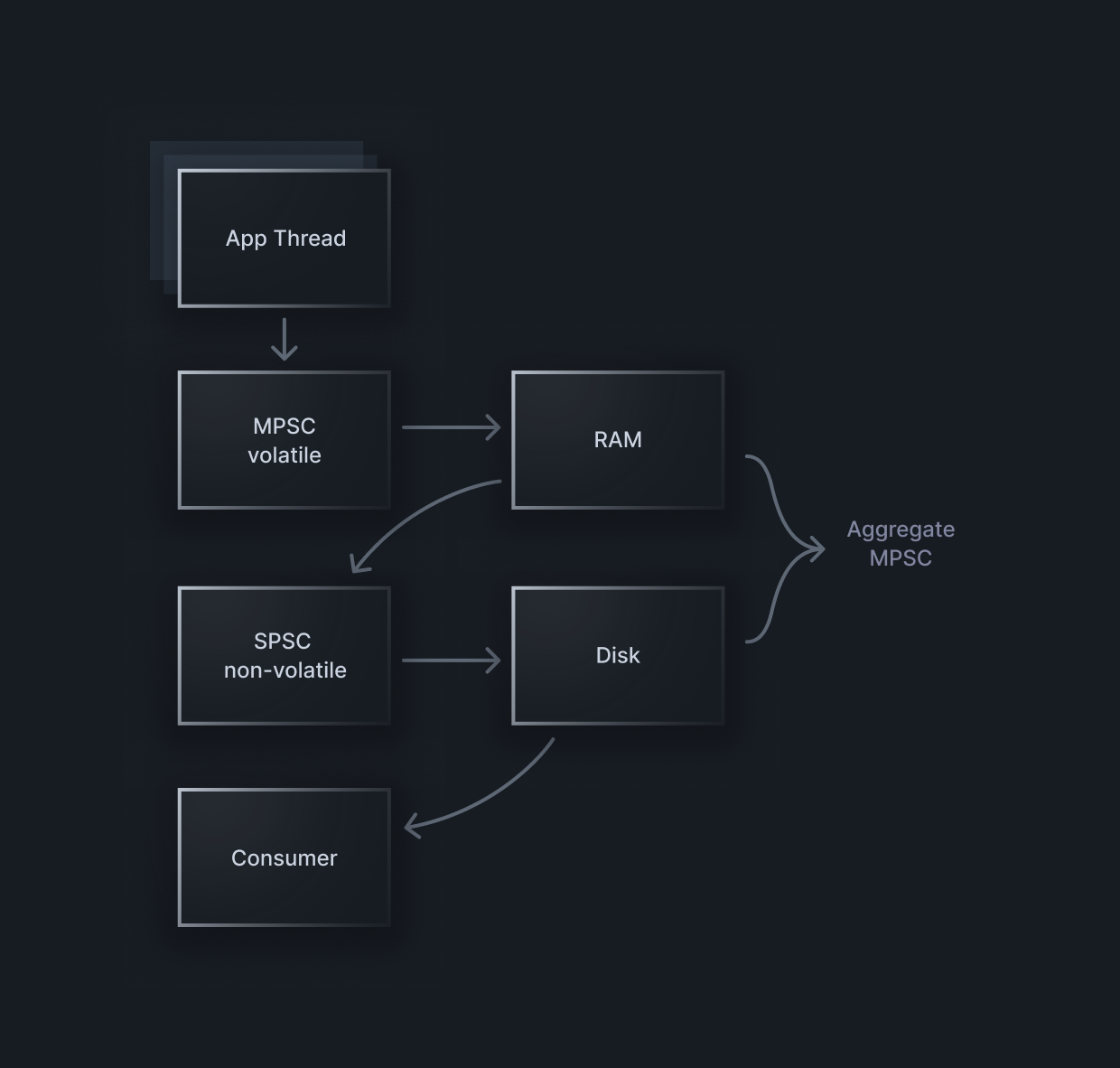

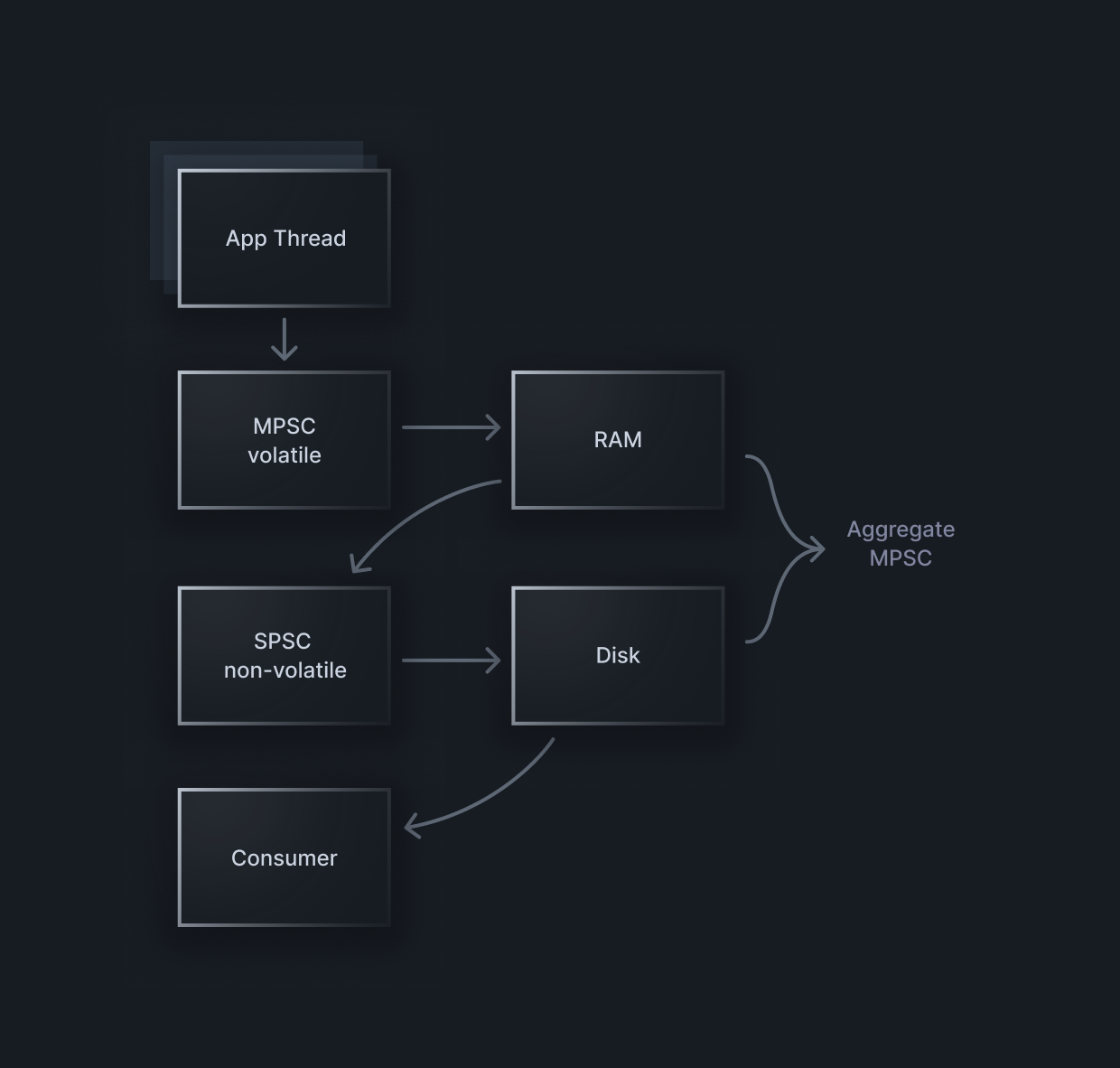

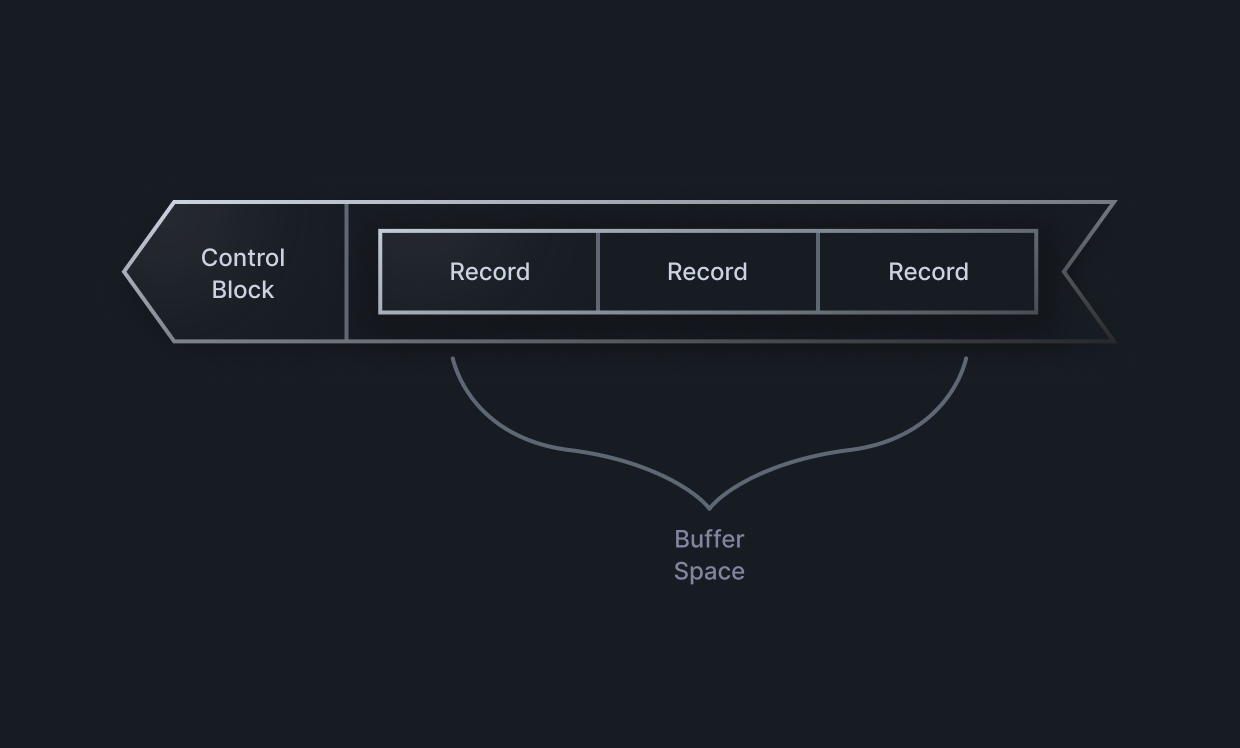

The above diagram shows the major components of the ring buffer:

The above diagram shows the major components of the ring buffer:

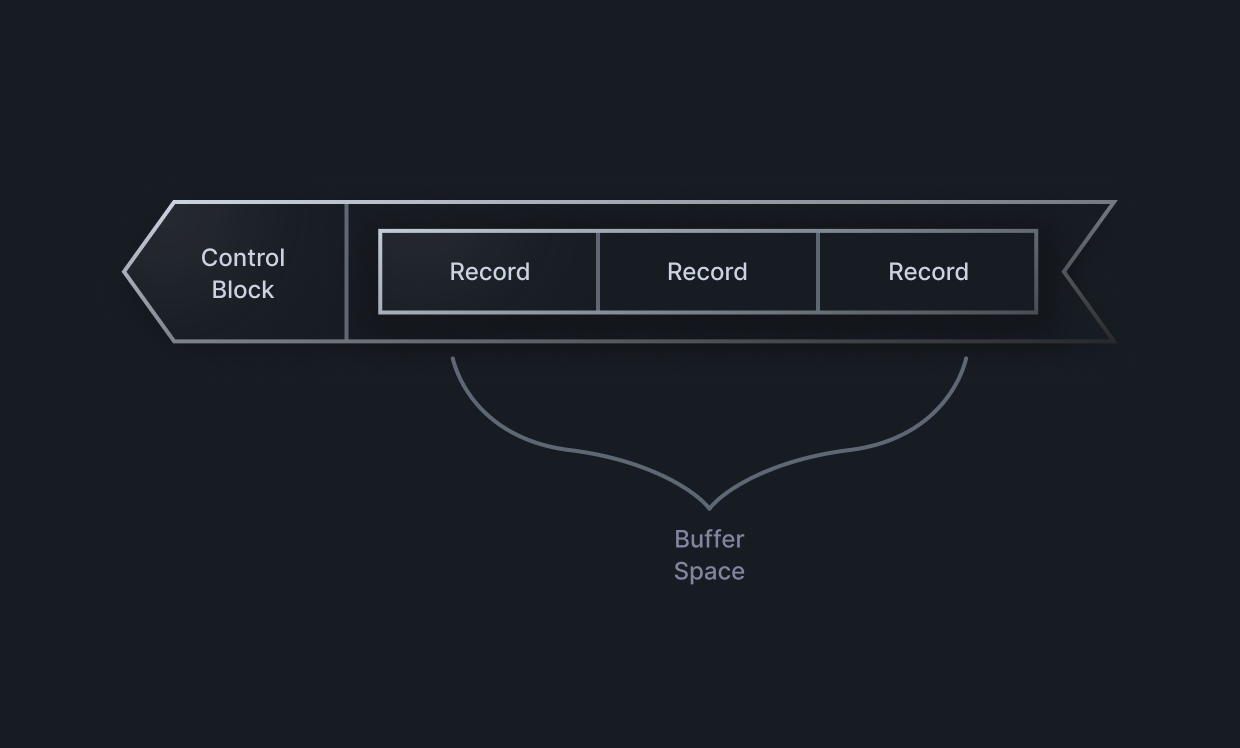

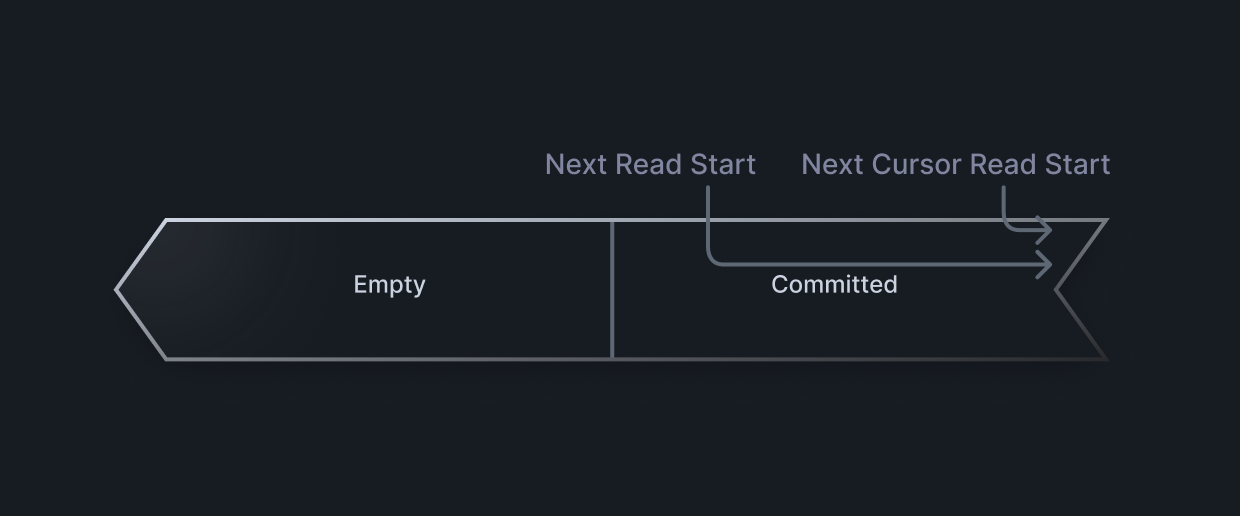

The above diagram shows the general layout of the ring buffer. At the head of the buffer is a small control block that includes various indexes into the buffer needed for normal operation. For example: next write start, committed write start, next read start, next cursor read start, etc.

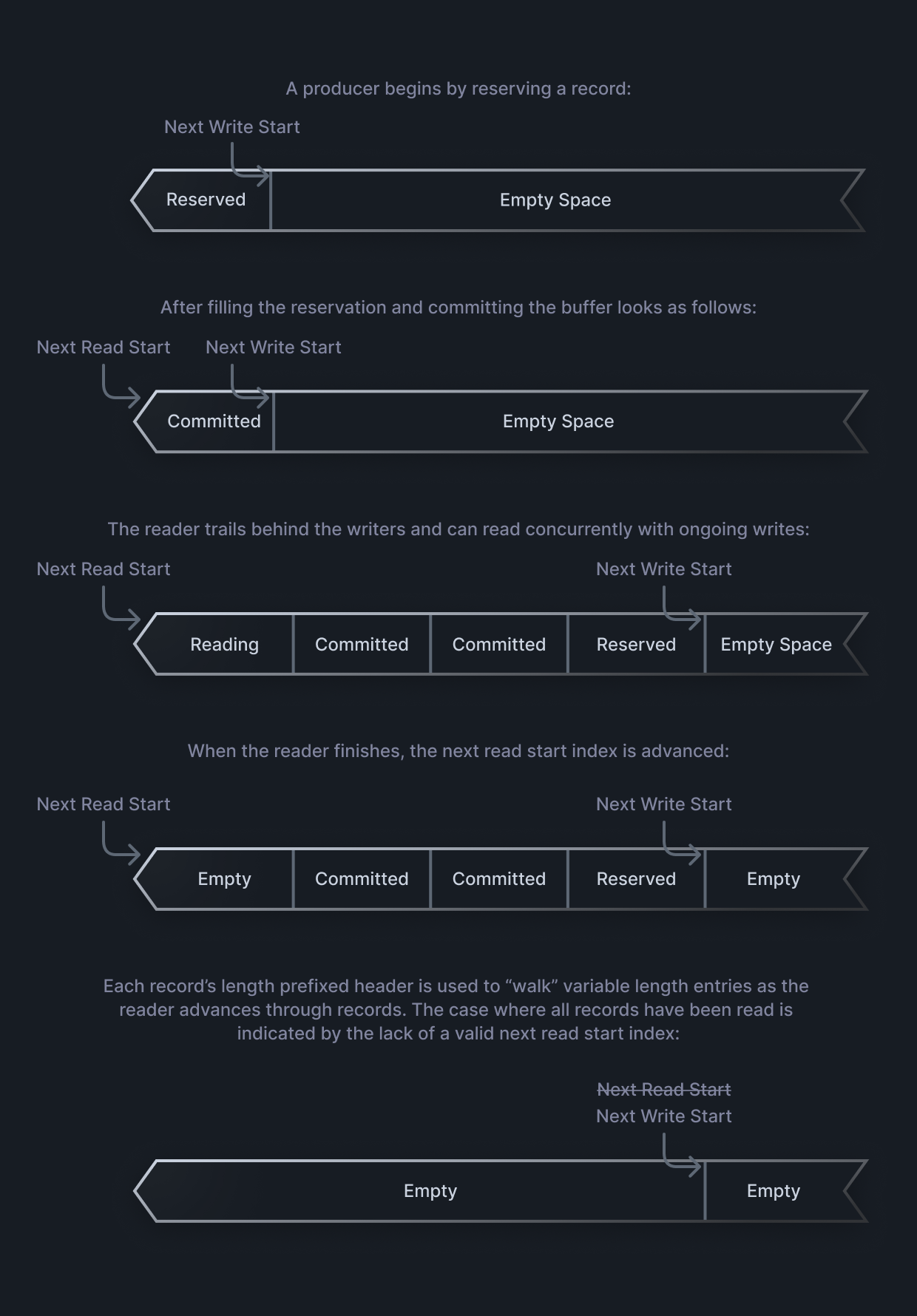

Space for an individual record is reserved by a producer. After data is filled into the record it is committed. At the point that it is committed, the record can be seen by a consumer. The API looks something like:

The above diagram shows the general layout of the ring buffer. At the head of the buffer is a small control block that includes various indexes into the buffer needed for normal operation. For example: next write start, committed write start, next read start, next cursor read start, etc.

Space for an individual record is reserved by a producer. After data is filled into the record it is committed. At the point that it is committed, the record can be seen by a consumer. The API looks something like:

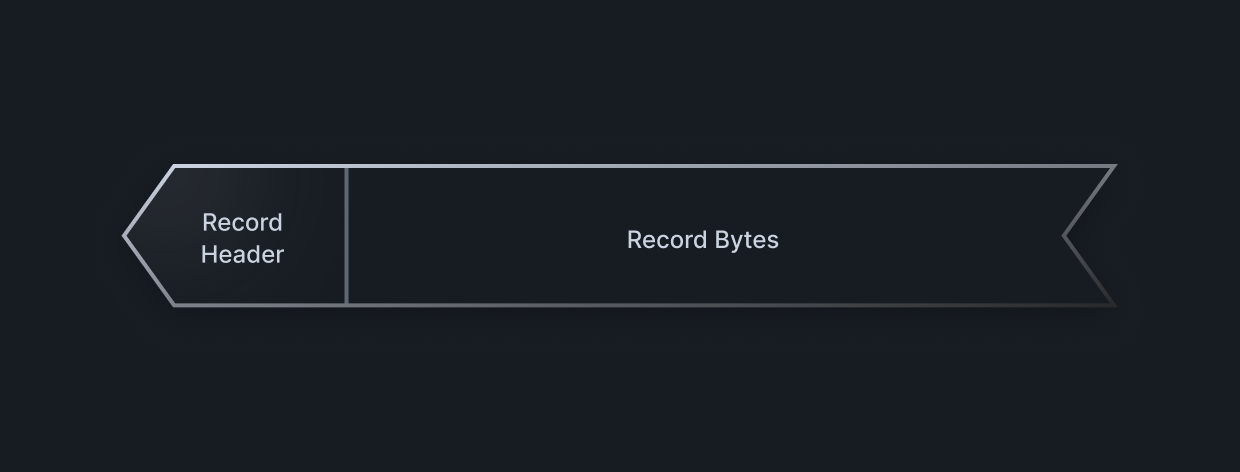

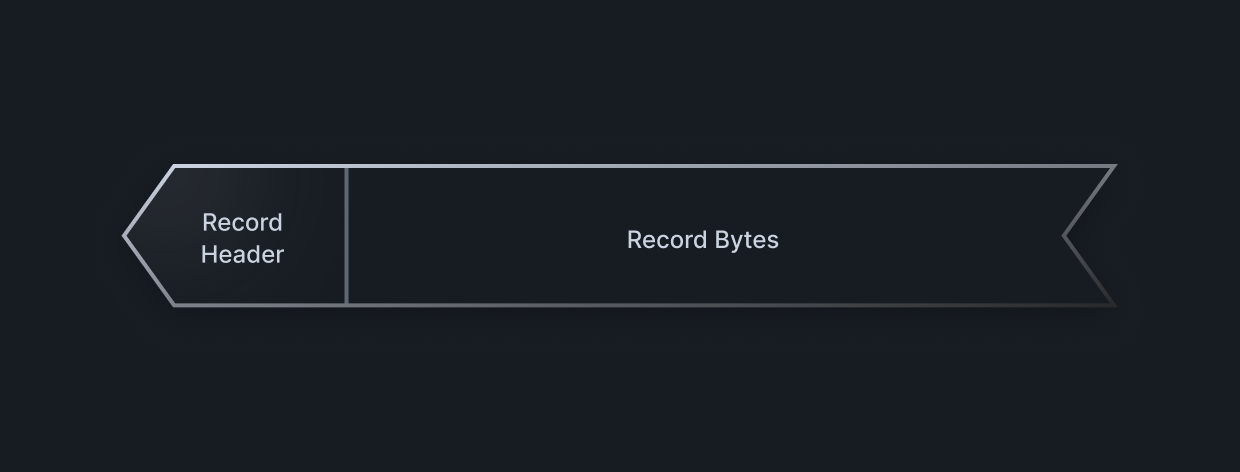

The above diagram shows the format of an individual record. Each record is prefixed with a fixed size header that includes the record size and extra space for optional data (more on this below). The header is followed by the record bytes which may be variable in size. The only limitation is that the record (including its header) must be smaller than the total size of the ring buffer.

The above diagram shows the format of an individual record. Each record is prefixed with a fixed size header that includes the record size and extra space for optional data (more on this below). The header is followed by the record bytes which may be variable in size. The only limitation is that the record (including its header) must be smaller than the total size of the ring buffer.

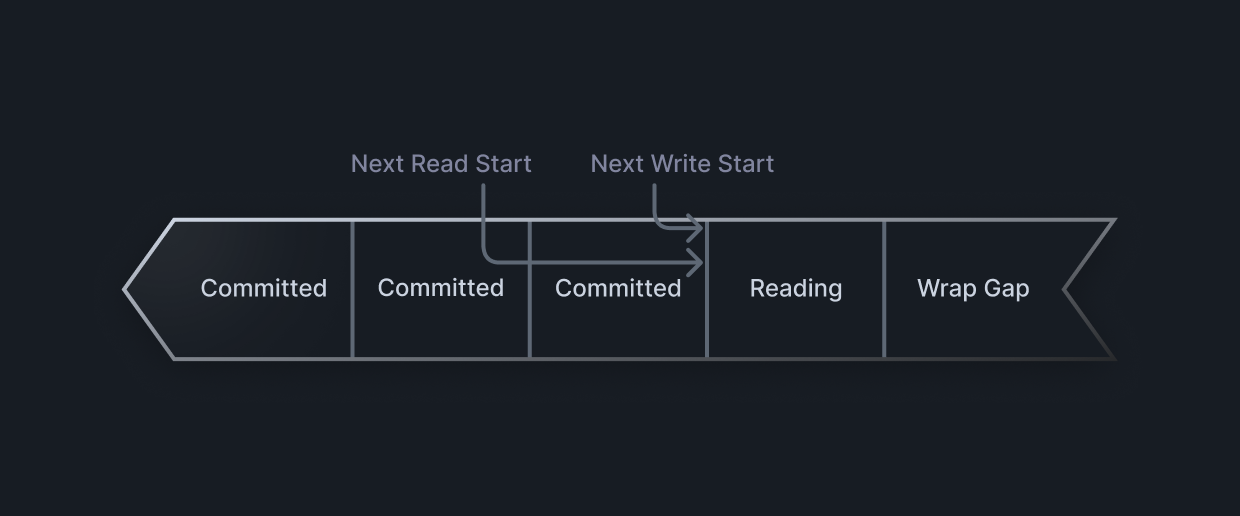

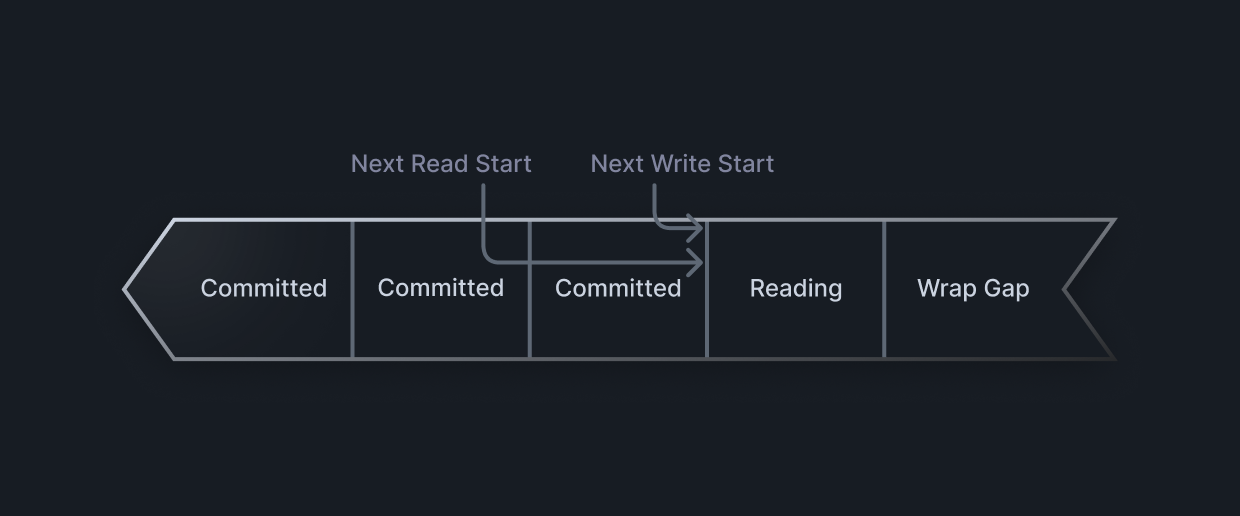

In the above case, the buffer is full (next write start is the same index as next read start). When the reader is done reading its current record, it needs to take into account the “wrap gap” so that it can move on to reading the next record at the beginning of the buffer. Thus, the existence (or not) of the wrap gap is explicitly tracked in the buffer control header.

In the above case, the buffer is full (next write start is the same index as next read start). When the reader is done reading its current record, it needs to take into account the “wrap gap” so that it can move on to reading the next record at the beginning of the buffer. Thus, the existence (or not) of the wrap gap is explicitly tracked in the buffer control header.

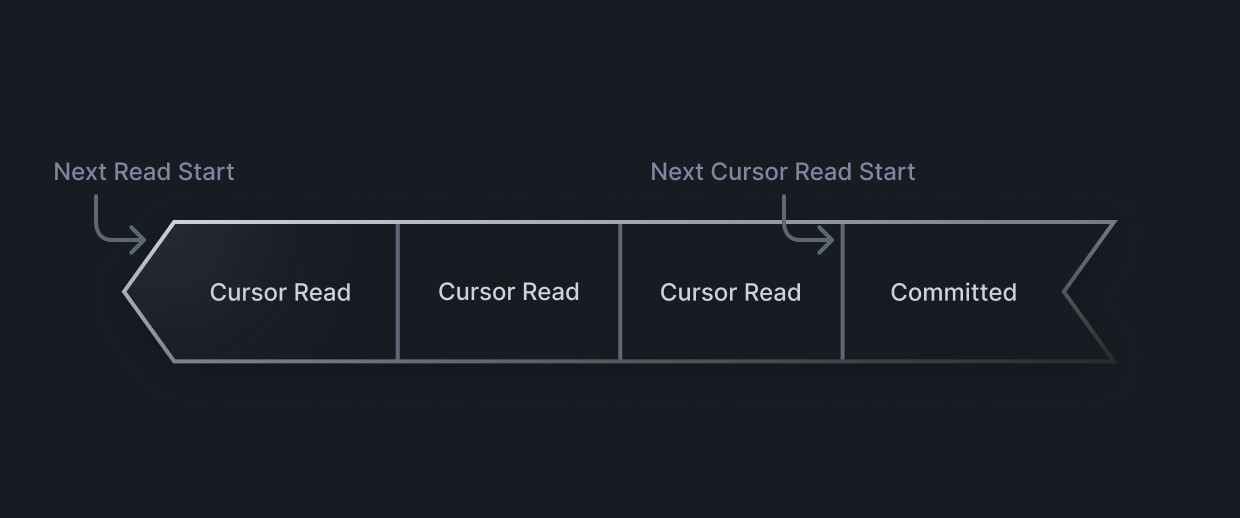

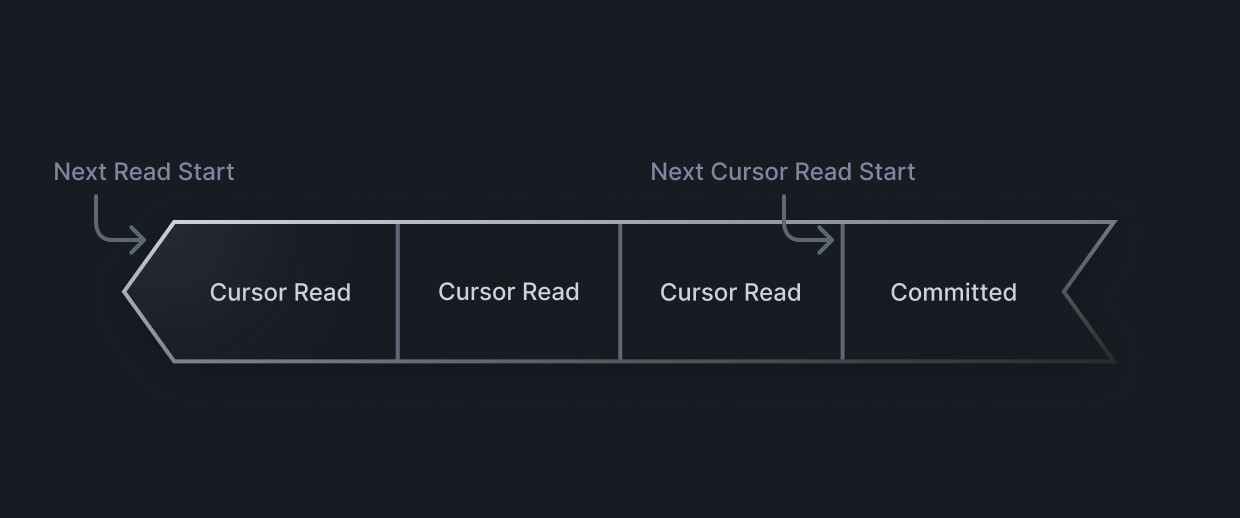

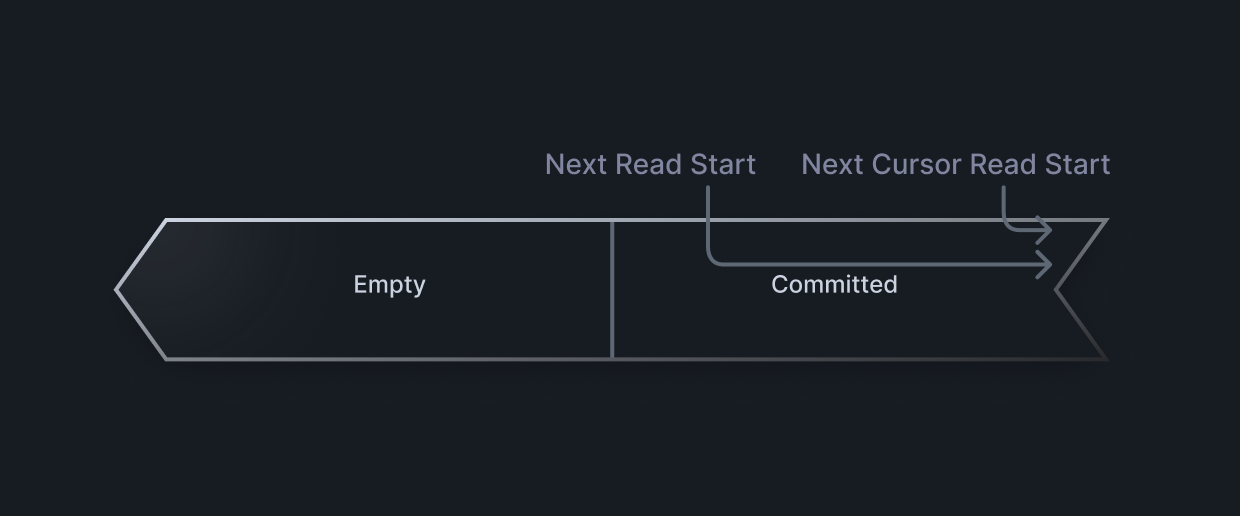

In the above diagram, multiple records have been read via the cursor, but the persisted next read start index has not been advanced. When the system is ready to advance it can do so via a builtin advance() API.

In the above diagram, multiple records have been read via the cursor, but the persisted next read start index has not been advanced. When the system is ready to advance it can do so via a builtin advance() API.

Design goals

Recall that the core value proposition of Capture is that telemetry is stored locally at the edge on millions of disparate mobile devices. Coupled with real-time control via the bitdrift control plane, telemetry is only sent to the bitdrift SaaS when a specific set of dynamically programmable events occur. Keeping telemetry local until it is requested both vastly reduces costs, allows debugging issues in ways that were previously impossible, and enables "time traveling": the ability to go back in time to see previous data that lead up to a more recent event. Thus was born the product requirement for a local storage subsystem with the following high level features and design goals:- Disk persistence: Mobile applications can be shutdown by the OS at any point, and also frequently lose network connectivity. Thus, RAM only storage is not sufficient if telemetry retrieval is to be reliable. Persisting telemetry data to disk is required for normal operation.

- Pre-allocated and fixed RAM and disk usage: It is critical that both RAM and disk usage are firmly bounded, knowable in advance, and configurable by the application developer. This allows developers to budget for telemetry storage, and potentially have differing budgets based on the underlying device (e.g., high-end devices with more RAM and larger disk and less prone to pressure might have larger budgets). Allocations occur upfront, allowing the Capture SDK to gracefully fail if resources cannot be acquired.

- Concurrent multiple producer and single consumer (MPSC) interface: Modern applications are multi-threaded. The storage subsystem must support many threads writing data concurrently, along with a concurrent reader that may be flushing data to the SaaS if trigger conditions are satisfied.

- Variable record size: Telemetry records are inherently variable in size. The storage subsystem must support efficient packing of variable size data.

- Cursor based consumption: If and when telemetry data is flushed to the SaaS, batches of data must be sent as a block for efficiency. In order to handle retries over unreliable networks, the storage subsystem must support a read cursor which allows many records to be read without the read status being “committed” to disk. If the upload fails or the application restarts the uncommitted reads can be retried later. When an upload ACK is received the read cursor can be advanced and committed.

- Resilience to disk corruption: Disk corruption is a fact of life, either due to partial writes or other factors. The storage subsystem must be resilient to this reality, and optimally be able to recover from some types of corruption without having to clear the entire buffer and lose everything it contains.

- High performance: Ultimately, the goal of Capture is to make outputting local telemetry “free.” In order to satisfy this illusion we would like the buffer implementation to have as low overhead as possible. This means limiting heap allocations, minimizing locking (in particular no locks should be held during memory copies, which can be concurrent), and in general performing as few copies as possible.

Low-level implementation details

Introduction to ring buffers

In computer science, a ring buffer (or circular buffer) is defined by Wikipedia as: a data structure that uses a single, fixed-size buffer as if it were connected end-to-end. Ring buffers are pervasive in low-level systems, both software and hardware, primarily because they do not allocate after initialization and thus have consistent performance characteristics. Further, ring buffers (and more generally streaming data systems) can be categorized as:- Single producer / single consumer (SPSC): this means that there is a single entity producing data and a single entity consuming data.

- Multiple producer / single consumer (MPSC): this means that there are multiple entities producing data and a single entity consuming data.

- Multiple producer / multiple consumer (MPMC): this means that there are multiple entities producing data and multiple entities consuming data.

- The implementation is RAM only.

- Ring buffer elements are required to be fixed size. (Of course a fixed size ring buffer entry could store a pointer to heap allocated variable size memory, but this would defeat our requirement of minimizing heap allocations and preallocating utilized resources, as well as make disk persistence difficult or impossible.)

The ring buffer

- Multiple application threads concurrently write telemetry data into the ring buffer.

- The first stage of the ring buffer is held entirely in volatile RAM and is multiple producer / single consumer (MPSC). RAM is used for speed. We would like data to be transferred into the first stage buffer as fast as possible so that application threads do not block waiting for disk writes (even when using cached disk IO the OS can decide to block writes while page cache is written back to disk). While this does mean that there is the chance of telemetry being lost if the volatile buffer does not get a chance to drain, we feel the performance gains are a reasonable tradeoff for most applications.

- The second stage of the ring buffer is stored on non-volatile disk for long term persistence. This portion of the buffer is single producer / single consumer (SPSC). A flush thread reads from the volatile MPSC buffer and writes into the non-volatile disk buffer.

- The combination of the volatile MPSC buffer and the non-volatile SPSC buffer is known as the “aggregate” MPSC buffer.

- A single consumer reads from the aggregate buffer when flushing or streaming telemetry to the bitdrift SaaS.

Memory mapped buffer design

In order to share as much code as possible between the RAM buffer and the disk buffer, a decision was made to implement the disk buffer in terms of memory mapped files. This means that the entirety of the disk buffer is mapped into the virtual memory space of the application (the almost complete rollout of 64-bit for modern applications removes any concerns about address space limitations, though this design means that any remaining 32-bit use cases have inherent limitations on disk buffer size). In general, memory mapped files allow Capture to rely on the OS for performance optimization of the disk access. The page cache is utilized for reads and writes with the OS performing cache fills and flushes as needed. Given that reads and writes tend to happen sequentially (as opposed to random access) for the Capture use case, the cache prefetch and writeback mechanisms that the OS employs are likely to be efficient.

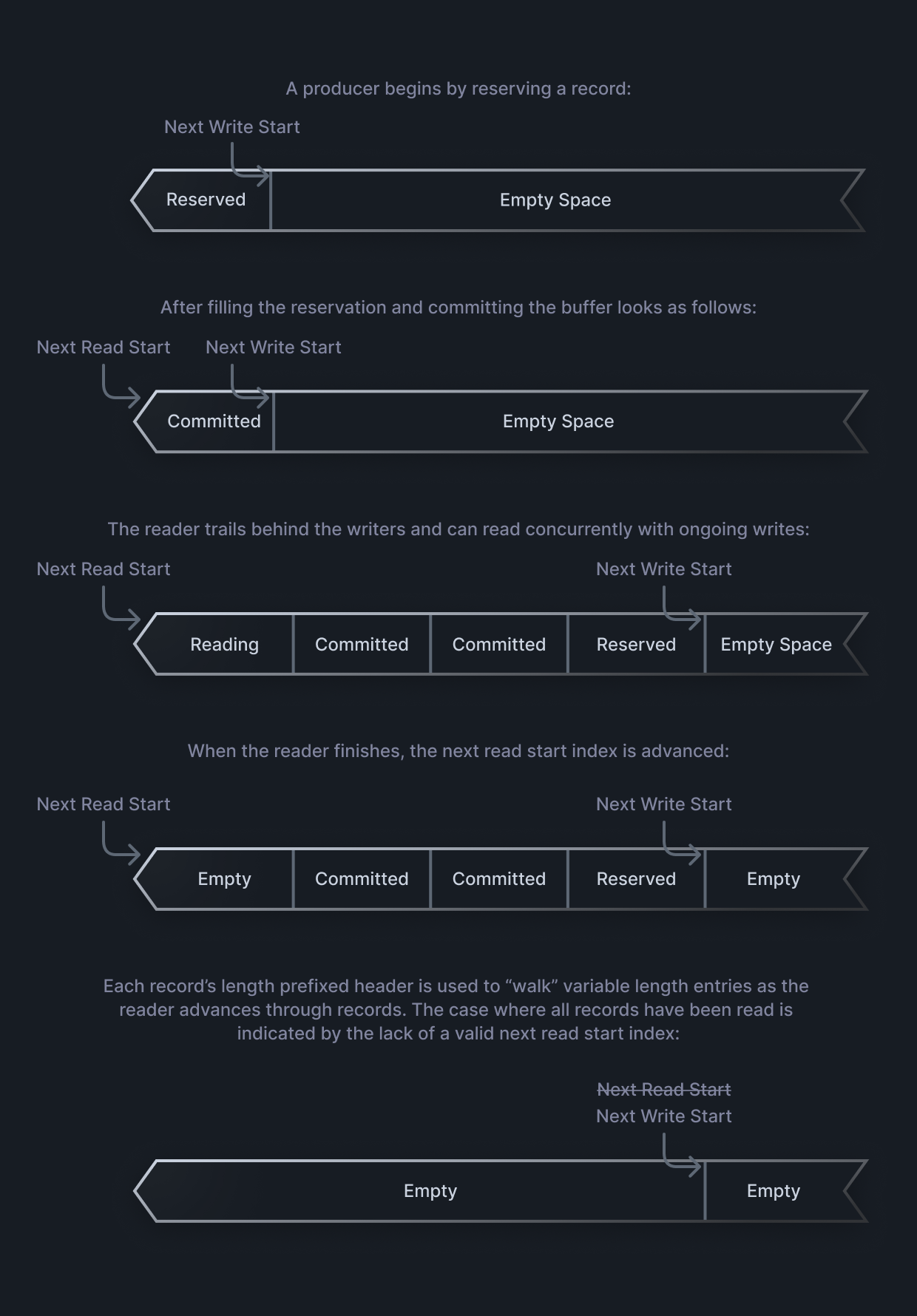

An important design point is that no locks are held between reserve() and commit(). Thus, multiple reservations can occur in parallel, data can be written in parallel, and then the records committed (potentially out of order from the original reservation order). The API for reading is similar:record = producer.reserve(size) … producer.commit()

The start_read() API returns the record memory without copying the record. The caller can inspect the record and decide whether or not to copy it somewhere else (for example a network payload buffer). The Capture SDK uses flatbuffers as the storage format for records. This has the very useful property that record data can be read directly from memory without any intermediate copying. Similar to writing, no locks are held between the start_read() and finish_read() calls. This means that writing can still happen in parallel to reading. (The buffer itself is not lockless. Locks are held during the start/finish calls so that various indexes can be checked and manipulated atomically.)record = consumer.start_read() … consumer.finish_read()

Basic buffer operation

The basic operation of the buffer works at a high level as follows:

The special case of wrapping

Using variable size records creates an interesting problem around wrapping the buffer (when we run out of space at the end and have to start overwriting the beginning). Because records are variable in size, there is no guarantee that the buffer will be filled all the way to the end. This is known as the “wrap gap” and the system has to explicitly remember the existence of this gap so it can differentiate between a lack of records after the last record that fits and having to look at the beginning of the buffer for more records to read.

Cursor reads

As discussed previously, one of the design goals of the ring buffer is to allow “cursor reads.” This means that multiple records can be read without actually advancing the underlying next read start index. This allows for records to be processed in batches in a fault tolerant manner. Only when a batch is fully acknowledged end-to-end is the underlying next read start index advanced and committed to disk. To this end, the buffer maintains an independent next cursor read start index that can be advanced independently of the persisted next restart start index.

Integrity checking

A design goal of the ring buffer is for it to be resilient to disk corruption, either due to premature shutdown or other underlying issues. Additionally, because applications may restart often, it’s also critical that validation of the buffer state does not take an unreasonable amount of time when working with a potentially sizable file. The non-volatile portion of the ring buffer uses the following techniques to satisfy these requirements:- The ring buffer control block (recall that this contains global indexes and other metadata and is a relatively small data structure) contains a built-in CRC32 of its contents. This CRC is recomputed every time any piece of data in the control block changes. On startup, the control block is validated to make sure the indexes are intact. This can be done very quickly and provides a strong indication of global corruption that would require the buffer to be zeroed out.

- Each record header maintains an independent CRC of the record contents. Recall that all record headers are length prefixed which allow for variable length records as well as inline “walking” of records as they are read. Before records are read from the non-volatile disk buffer, the CRC for the record is validated. This handles the case where an individual record is partially written or corrupted in some fashion, but the length prefix is still valid and the indexes can be reliably advanced. Note that if the length itself becomes corrupted (for example indexing off the end), the system must defend against this by potentially invalidating the entire buffer and starting from scratch.

- A substantial amount of care has been taken to use checked arithmetic when operating on indexes that properly validate lack of overflow. Corruption can lead to overflow and subtle out of bounds conditions that must be defended against. See below for more information on testing and validation of the system against these conditions.

Performance optimization

Various techniques have been used to make the ring buffer as fast as reasonably possible so that application developers can emit as much telemetry as they want without fear of impact on application performance:- As described previously, the buffer is concurrent across producers and consumers and does not hold any locks when both writing and reading records.

- All buffer RAM and disk is allocated upfront. Additionally, for a small number of use cases that do require heap allocations (in particular tracking active concurrent reservations), the buffer utilizes intrusive linked lists with a free list for unused entries. This means that at steady state there are zero heap allocations1.

- For conditions that require waiting and wakeup, explicit condition variables are utilized (as opposed to something like the Abseil conditional mutex) so that in common cases lock acquire and release is as low overhead as possible.

Implementation language

The ring buffer was some of the first Capture SDK code written. Like the rest of the initial prototype it was written in C++, due to the familiarity with the language by the initial authors. While the rest of the SDK was switched to Rust relatively early on as discussed in the SDK overview blog post, the ring buffer code remained C++ for quite some time. About a year ago we undertook the effort to port the C++ code to Rust. This was done for multiple reasons:- Binary size (the C++ code pulled in a non-trivial amount of extra dependencies from libraries like Abseil that were redundant to what was required in the Rust code).

- Tooling. Working with Rust is a substantially better developer experience than working with C++.

- Correctness. At least theoretically, the Rust implementation would be more provably correct than the C++ implementation.

Testing strategy

As is probably clear if the reader has made it this far, designing and implementing the ring buffer was not an easy task. It’s obviously critical that the buffer works correctly as it underpins the entire offering. This section covers how we test and validate the buffer.Unit testing

Not surprisingly, the first pass of testing utilizes unit testing with manually crafted sequences of record writes and reads. This provides a good base of coverage for the system and allows for rapid iteration. However, it’s extremely difficult (if not impossible) to manually generate test cases for all of the different permutations that may occur given variable length records.Fuzz testing

Given the impossibility of manual test case generation, we turned to fuzz testing. Fuzz testing uses profile/coverage driven automation to generate inputs for a program, and then based on program control flow will mutate the input to look for new program paths. Fuzz testing is an extremely powerful method to uncover correctness issues that may lead to security problems (for example in network protocols) or data corruption issues (for example in file systems). The initial C++ implementation used the following setup for fuzzing:- LLVM’s libFuzzer as the fuzz driver.

- Google’s libprotobuf-mutator to generate fuzz inputs. In general, fuzzing operates on a raw byte array as input. This is useful for certain cases such as network protocols, but for driving more complex procedural tests, it’s more useful to have structured input to drive each test case. The mutator library converts the fuzz generated byte array into a concrete Protocol Buffers message which is then used to drive the test case.

- Single producer / single consumer (SPSC) that operates over all three buffer types (volatile, non-volatile, and aggregate).

- Multiple producer / single consumer (MPSC) that operates only on the volatile buffer.

- A buffer corruption fuzzer that operates only on the MPSC aggregate buffer.

- cargo fuzz is used to drive fuzzing.

- The libfuzzer-sys crate is used to wrap libfuzzer.

- Instead of using Protobuf to drive test cases, we switched to using the arbitrary crate as this is natively supported by libfuzzer-sys, and serves an identical purpose as libprotobuf-mutator while allowing the use of native Rust structs.

Future work

While the Capture SDK and its underlying ring buffer implementation have been installed on millions of devices and we are reasonably sure of its reliability and correctness, there is always more to do. In the future we would like to:- Explore more rigorous performance testing and profiling across different devices.

- Handle buffer resizes without having to copy or lose existing data.

- Add hooks to allow for additional filtering and transformations that can occur when data is moved between the volatile and non-volatile buffers.

Footnotes

- The use of memory mapped files means that page faults can still occur if pages have to be mapped into the application’s virtual memory space. ↩