Capture SDK under the hood: Our approach to efficiency through dynamic configuration

In this blog post, we are excited to talk about the architectural and design principles that underpin the development of the Capture SDK. While the ideas behind the Capture SDK came from a general frustration with the limitations of mobile observability, the internal architecture can be traced back to the work many of the initial bitdrift team members did on both Envoy and Envoy Mobile during their time at Lyft.

Capture aims to provide mobile developers a way to have rich instrumentation of their apps without paying for the constant flow of telemetry. Telemetry is stored locally and only sent to the bitdrift SaaS when requested to do so via flexible workflow rules that give the developer the power to decide when and how often mobile applications should upload telemetry data. Through real-time dynamic reconfiguration, users are able to get new insights from their app in seconds instead of the weeks that a traditional release cycle might take.
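The "store locally, upload only when a rule says so" model can be made concrete with a minimal, std-only Rust sketch. It illustrates the idea under simplifying assumptions and is not the SDK's implementation. Events go into a fixed-capacity in-memory `VecDeque`, whereas the SDK itself writes flatbuffer-encoded events to an on-disk ring buffer. `LogEvent`, `RingBuffer`, `FlushRule`, and `record` are hypothetical names. Every event is buffered, and the buffer contents are handed back for upload only when the hypothetical flush rule matches.

```rust
use std::collections::VecDeque;

/// Hypothetical log event for this sketch: a severity level and a message.
#[derive(Debug, Clone, PartialEq)]
pub struct LogEvent {
    pub level: u8,
    pub message: String,
}

/// Hypothetical fixed-capacity buffer: once full, the oldest event is evicted,
/// so memory use stays bounded regardless of how much the app logs.
pub struct RingBuffer {
    capacity: usize,
    events: VecDeque<LogEvent>,
}

impl RingBuffer {
    pub fn new(capacity: usize) -> Self {
        assert!(capacity > 0, "capacity must be non-zero");
        Self { capacity, events: VecDeque::with_capacity(capacity) }
    }

    pub fn push(&mut self, event: LogEvent) {
        if self.events.len() == self.capacity {
            self.events.pop_front(); // evict the oldest entry
        }
        self.events.push_back(event);
    }

    pub fn len(&self) -> usize {
        self.events.len()
    }

    /// Remove and return everything currently buffered (what an upload would send).
    pub fn drain(&mut self) -> Vec<LogEvent> {
        self.events.drain(..).collect()
    }
}

/// Hypothetical flush rule: fires on an event at or above `min_level`
/// whose message contains `needle`.
pub struct FlushRule {
    pub min_level: u8,
    pub needle: String,
}

impl FlushRule {
    pub fn matches(&self, event: &LogEvent) -> bool {
        event.level >= self.min_level && event.message.contains(self.needle.as_str())
    }
}

/// Always buffer the event locally; return the buffered events for upload
/// only when the flush rule fires.
pub fn record(buffer: &mut RingBuffer, rule: &FlushRule, event: LogEvent) -> Option<Vec<LogEvent>> {
    let should_flush = rule.matches(&event);
    buffer.push(event);
    if should_flush {
        Some(buffer.drain())
    } else {
        None
    }
}
```

Because the buffer evicts its oldest entry when full, memory use in this sketch is bounded by `capacity` no matter how much the app logs. An event that never triggers a flush costs only a rule check and a push.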

While we wanted to make use of a shared core to implement the platform, we were also cognizant of some of the difficulties present in the mobile world. From our work on Envoy Mobile, we were painfully aware of how complex mobile networking can be thanks to VPNs, proxies and imperfect mobile networks. So much so that while a library like hyper recently hit 1.0 and is considered stable, we weren’t confident that it would live up to the standards we had for a mobile HTTP client.

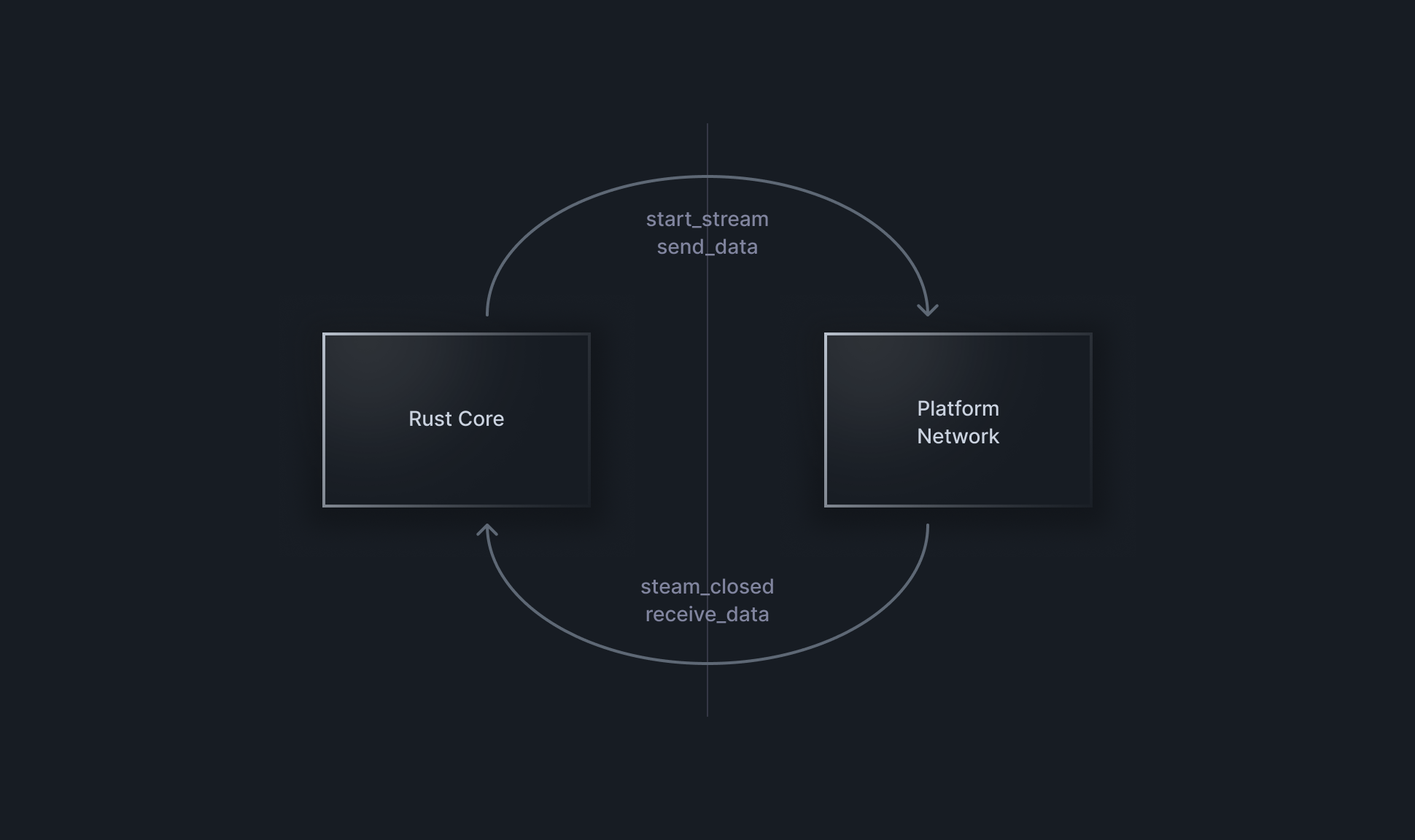

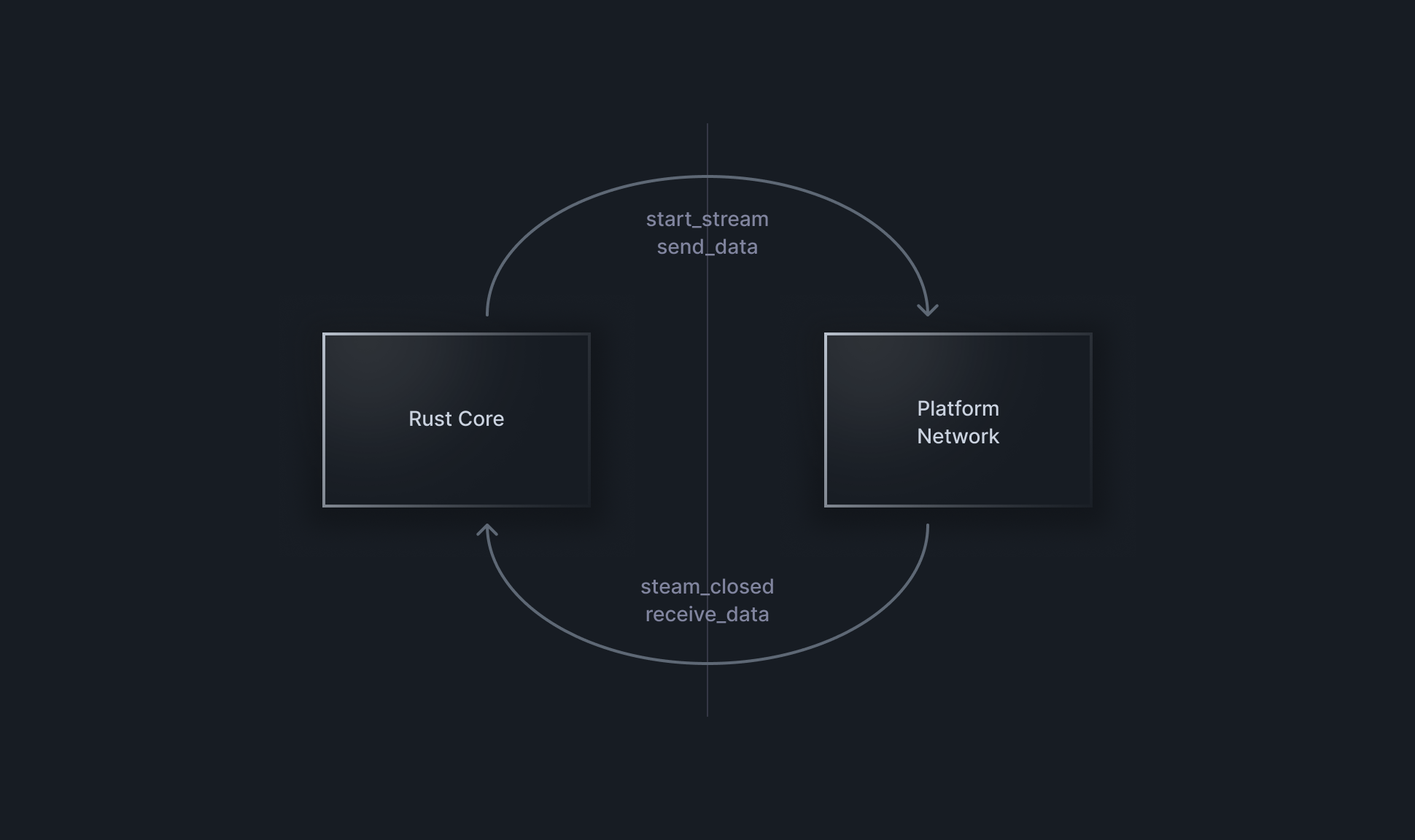

Due to this concern, we early on wanted to make sure that we were using a HTTP library that was thoroughly battle-tested on the platform to communicate with our backend. This led us to adding a network abstraction to our core library that would allow us to implement the actual networking layer with OkHttp for Android and URLSession for iOS. Implementing a bi-directional HTTP stream via UrlSession proved challenging, but in the end we ended up with a networking stack with the same stability as networking used by a platform-native app.

Expect a future blog post that talks about some of the challenges involved with implementing this!

While we wanted to make use of a shared core to implement the platform, we were also cognizant of some of the difficulties present in the mobile world. From our work on Envoy Mobile, we were painfully aware of how complex mobile networking can be thanks to VPNs, proxies and imperfect mobile networks. So much so that while a library like hyper recently hit 1.0 and is considered stable, we weren’t confident that it would live up to the standards we had for a mobile HTTP client.

Due to this concern, we early on wanted to make sure that we were using a HTTP library that was thoroughly battle-tested on the platform to communicate with our backend. This led us to adding a network abstraction to our core library that would allow us to implement the actual networking layer with OkHttp for Android and URLSession for iOS. Implementing a bi-directional HTTP stream via UrlSession proved challenging, but in the end we ended up with a networking stack with the same stability as networking used by a platform-native app.

Expect a future blog post that talks about some of the challenges involved with implementing this!

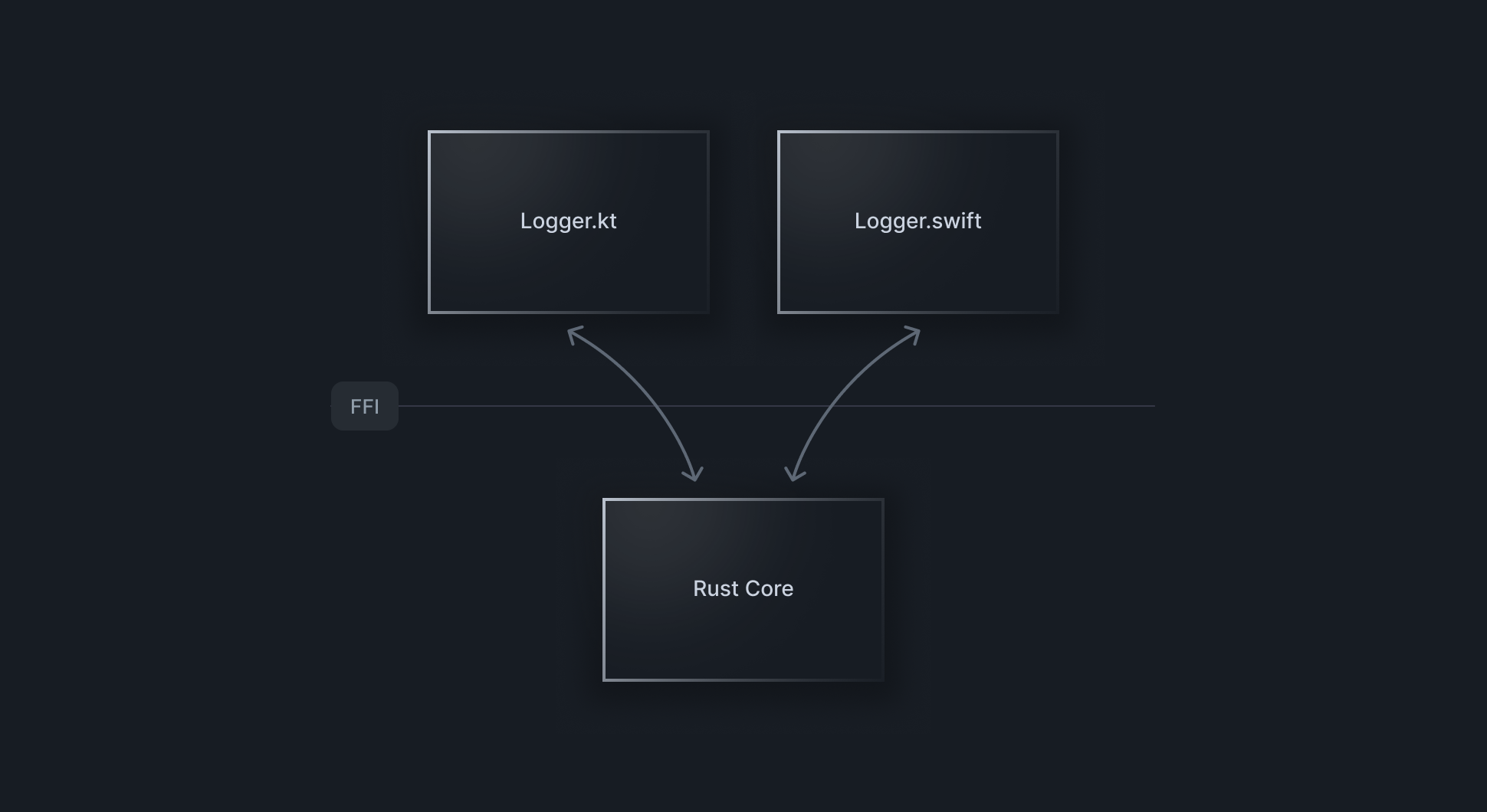

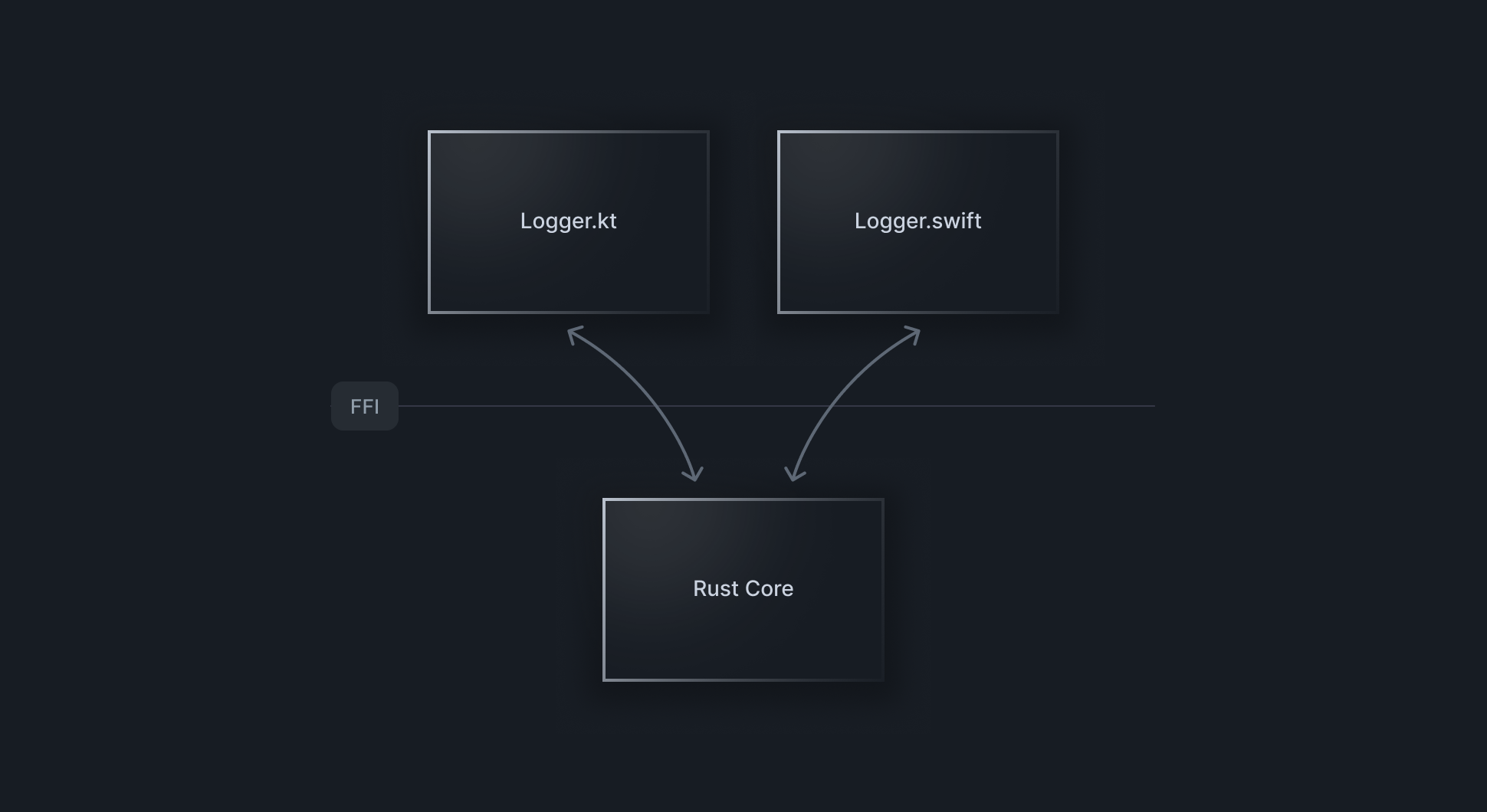

The above sections have talked about much of the internals used to manage the inbound flow of telemetry, but this is only as useful as the raw data being fed into the system. To provide deep integrations with each mobile platform, there is platform layer that serves multiple purposes:

The above sections have talked about much of the internals used to manage the inbound flow of telemetry, but this is only as useful as the raw data being fed into the system. To provide deep integrations with each mobile platform, there is platform layer that serves multiple purposes:

Some History

The Capture SDK was in part motivated by a frustration at the lack of high quality mobile observability tools available. This became obvious to us while we were attempting to monitor the Envoy Mobile rollout at Lyft, where the lack of observability had us flying blind until we started investing into better tooling. Envoy Mobile is a networking library for mobile that leverages a shared native core based on the Envoy network proxy, which allows it to provide a consistent implementation of a HTTP proxy shared between all supported platforms. With a shared core, the increasingly complex HTTP protocols can be implemented in the same code, ensuring consistent behavior and predictable results between platforms. When we started looking at implementing the observability platform that would underpin the Capture SDK, we were interested in many of these properties: we wanted to be able to build a flexible platform with the capability to support not only our initial vision of on-demand telemetry but also provide the building blocks for many more features to come. With our relatively small team, the idea of writing this code once and sharing it between platforms was very appealing: not only would we avoid differences in logic between supported platforms, but we’d also be primed to support many more platforms in the future without having to rewrite the library core.Choosing Rust

While we had some early efforts to build the platform in C++ due to our team’s significant C++ experience, we quickly realized that as a greenfield project we were in a great position to use whatever language we wanted. As we’d already started investing in Rust on the backend side of things, Rust emerged as an obvious candidate for the native core of the SDK. Not only was Rust becoming increasingly mature with a vast ecosystem of third party libraries, but the safety guarantees of Rust seemed ideal in a mobile environment where shipping a crash could be costly due to longer release cycles. It took us a couple of weeks to rewrite what we had in Rust, and we’ve been very happy with the choice: most of us were able to pick up the language without much difficulty and it has proved a much more productive choice of language than C++.Rust Core

With some historical context out of the way, let’s take a look at the actual implementation. The core of the library is an event loop orchestrated by the tokio crate. Using async Rust via tokio has allowed us to efficiently implement everything from networking and file operations to buffer processing through a consistent framework and run the vast majority of the work on a single thread. Given the number of async networking and file operations, an event loop makes for very effective resource usage: while one task is busy waiting for a file or networking operation, other tasks can proceed with other CPU bound work. The event loop is responsible for maintaining a persistent bi-directional stream with the bitdrift control plane, which is used to inform the control plane of the existence of the client and to receive the targeted configuration for the specific client. This configuration takes immediate effect, and configures other parts of the system. One of the components is the logging subsystem: most telemetry data is collected as log events which are written to the disk ring buffer via a series of in-memory buffers. The real-time configuration received from the server tells the SDK what log events to forward to the ring buffer, as well as specifying conditions for when the ring buffer data should be flushed to the SaaS. Re-using the persistent stream for data uploads allows us to apply compression to the data, which grows increasingly efficient during the duration of the stream as the compression library is able to recognize repeated patterns and improve its encoding. These components form the core of the SDK: an efficient, fixed resource usage log event buffer with flush rules that are configured via real-time APIs. Logging becomes “free” in most cases as the log events are just inspected according to the configured rules and written to a buffer; data is only uploaded when the SDK has been configured to do so. 
Thus, Capture allows logs to be written to the SDK without concern for the immediate cost of the log and provides users control over what they want to upload after the fact. Should the logs be uploaded this also ends up being very cheap: the logs are written as flatbuffers to the ring buffer, so uploading just means copying out the raw bytes within the buffer and dispatching it to the SaaS.Real-time APIs

One of the key learnings we carried over from creating and working with Envoy is the value of real-time APIs and dynamic reconfiguration. By making as much as possible reconfigurable in real-time, we can rely on our sophisticated control plane to make targeted decisions based on the connected clients and modify the configuration in use for clients. For example, our control plane is able to monitor the amount of data uploaded by clients and push out configuration changes to slow down or stop the flow of data uploads in real-time. Dynamic real-time configuration sets the stage for concepts similar to that of “global load balancing” that the Envoy xDS APIs allow: a centralized control plane that is able to ingest data about the system and make split-second decisions to modify the configuration sent out to the relatively simple data plane, allowing the fleet of mobile applications running the SDK to cooperate without ever having to talk to each other.Platform Native Networking

Platform Integrations

- Provide a configuration point for users to initialize and configure the Capture SDK

- Provide a number of out-of-the-box integrations that automatically record telemetry from the system using platform-specific mechanisms

- Provide users a way to feed explicit telemetry into the system in the form of log events

- Provide platform-specific features like session replay and resource utilization, which are yet another application of telemetry recorded as log events
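All of these purposes end up producing log events. The following std-only Rust sketch illustrates that funnel under stated assumptions:

- `Logger`, `Config`, `log`, and `record_memory_sample` are hypothetical names, not the Capture SDK's API.
- The platform layer and the core are collapsed into one Rust type.
- A shared `Arc<RwLock<Config>>` stands in for configuration that the real SDK receives over the control-plane stream.

Both the explicit `log` call and the automatic memory integration go through a single `ingest` path that consults the current config on every event.

```rust
use std::collections::BTreeMap;
use std::sync::{Arc, RwLock};

/// Hypothetical log event: a message plus structured key/value fields.
#[derive(Debug, Clone, PartialEq)]
pub struct LogEvent {
    pub message: String,
    pub fields: BTreeMap<String, String>,
}

/// Hypothetical stand-in for remotely delivered configuration:
/// keep only events whose message starts with one of these prefixes.
#[derive(Debug, Clone, Default)]
pub struct Config {
    pub keep_prefixes: Vec<String>,
}

/// Hypothetical platform-facing handle (not the Capture SDK API).
pub struct Logger {
    config: Arc<RwLock<Config>>,
    kept: Vec<LogEvent>,
}

impl Logger {
    pub fn new(config: Arc<RwLock<Config>>) -> Self {
        Self { config, kept: Vec::new() }
    }

    /// Explicit telemetry fed in by app code.
    pub fn log(&mut self, message: &str, fields: &[(&str, &str)]) {
        let fields: BTreeMap<String, String> = fields
            .iter()
            .map(|(k, v)| (k.to_string(), v.to_string()))
            .collect();
        self.ingest(LogEvent { message: message.to_string(), fields });
    }

    /// Hypothetical automatic integration: a memory sample becomes a log event.
    pub fn record_memory_sample(&mut self, used_bytes: u64) {
        let mut fields = BTreeMap::new();
        fields.insert("used_bytes".to_string(), used_bytes.to_string());
        self.ingest(LogEvent { message: "resource.memory".to_string(), fields });
    }

    /// Single entry point for every event. It reads the *current* config each
    /// time, so a config update applies to the very next event.
    fn ingest(&mut self, event: LogEvent) {
        let config = self.config.read().expect("config lock poisoned");
        let keep = config
            .keep_prefixes
            .iter()
            .any(|prefix| event.message.starts_with(prefix.as_str()));
        drop(config); // release the read lock before mutating our own state
        if keep {
            self.kept.push(event);
        }
    }

    /// Events that passed the filter (what would be written to the buffer).
    pub fn kept(&self) -> &[LogEvent] {
        &self.kept
    }
}
```

Re-reading the config on each event lets a configuration change take effect for the very next event without an app release. In the sketch, that change is simulated by writing to the lock.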

Conclusion

With the architecture outlined above, we’ve seen great success, with developers able to debug issues much faster than before. Thanks to dynamic real-time configuration delivery, users can capture sessions that exhibit a complex series of events within seconds, without having to worry about the cost of telemetry. The performance overhead of the SDK on mobile devices is very “boring” thanks to the fixed-size ring buffers employed, which minimizes the overall impact on the rest of the app. If the above sounds like a lot: don’t worry! We intend to continue publishing posts on many of the subsystems mentioned above, as they are complex enough to warrant in-depth explanations. A more comprehensive discussion of the architecture, which explains the performance characteristics of the SDK, can be found at https://docs.bitdrift.io/sdk/performance-measurements.

Author

Snow Pettersen