Announcing Pulse proxy

Following the announcement that the Capture SDK source is now available, we are thrilled to also announce the availability of Pulse, an observability proxy built for very large metrics infrastructures. Read on for an overview of Pulse, a brief history of its creation, and how it fits into the larger server-side observability ecosystem.

Pulse derives ideas from previous projects in this space, including statsite and statsrelay, while offering modern API-driven configuration and hitless configuration reloading similar to that offered by Envoy.
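This post does not describe how Pulse implements hitless reloading. As an illustration only, here is a minimal sketch of one common pattern: configuration lives in immutable snapshots, a reload swaps a single reference, and in-flight work keeps the snapshot it started with, so nothing restarts. `ProxyConfig`, `ConfigHolder`, and `process_batch` are hypothetical names, not Pulse APIs.

```python
import threading
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ProxyConfig:
    """Hypothetical immutable config snapshot; fields are illustrative only."""
    version: int
    blocked_metrics: FrozenSet[str]


class ConfigHolder:
    """Publishes config snapshots; readers never wait on a reload."""

    def __init__(self, initial: ProxyConfig) -> None:
        self._current = initial
        self._write_lock = threading.Lock()  # serializes concurrent reloads only

    def snapshot(self) -> ProxyConfig:
        # Reading one attribute reference is atomic in CPython, so a reader sees
        # either the old or the new snapshot, never a half-applied config.
        return self._current

    def reload(self, new: ProxyConfig) -> bool:
        with self._write_lock:
            if new.version <= self._current.version:
                return False  # ignore stale or duplicate pushes
            self._current = new  # single reference swap; no process restart
            return True


def process_batch(holder: ConfigHolder, names: List[str]) -> List[str]:
    cfg = holder.snapshot()  # pin one snapshot for the whole batch
    return [n for n in names if n not in cfg.blocked_metrics]


holder = ConfigHolder(ProxyConfig(version=1, blocked_metrics=frozenset()))
holder.reload(ProxyConfig(version=2, blocked_metrics=frozenset({"debug_latency"})))
print(process_batch(holder, ["requests", "debug_latency"]))  # ['requests']
```

Pinning one snapshot per batch means a reload that lands mid-batch only affects the next batch, which is the property that makes the reload "hitless" in this sketch.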

Metrics, you say? Hasn’t the observability world moved on to structured logs as the preferred source of all data? While that is indeed the industry trend, and in fact the approach taken by Capture, our mobile observability product, good ol’ metrics are still the backbone of the observability practice at many, many very large organizations.

While OTel Collector, Fluent Bit, and Vector are all excellent projects that offer some level of metrics support, they fall short when it comes to scaling very large metrics infrastructures, primarily in the following areas:

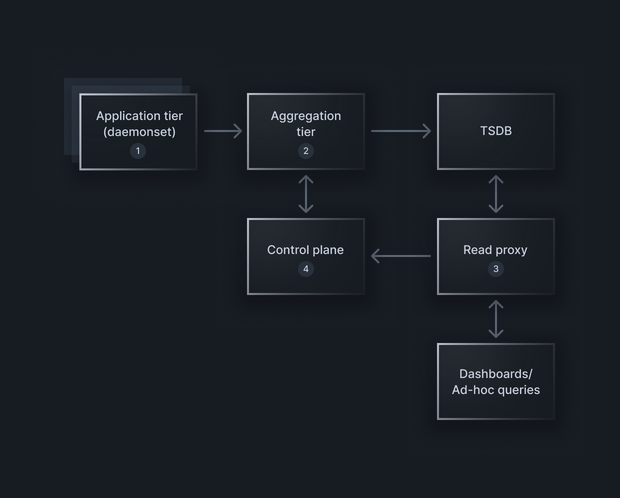

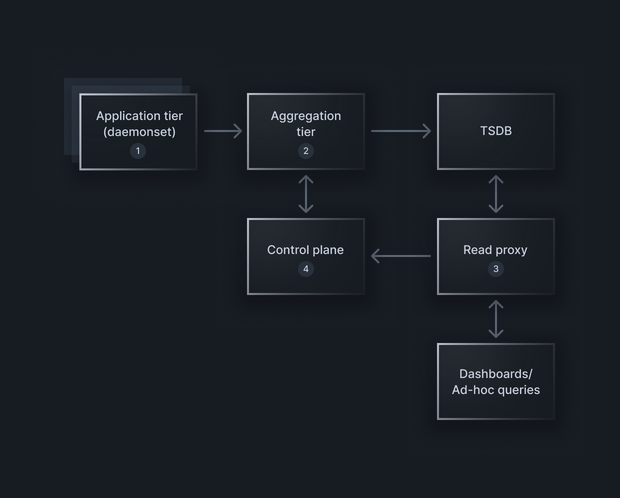

As an example of how Pulse might be used, a simplified version of Lyft’s metrics infrastructure is shown in the above diagram.

As an example of how Pulse might be used, a simplified version of Lyft’s metrics infrastructure is shown in the above diagram.

- Aggregation: E.g., dropping a pod label to derive service level aggregate metrics. Aggregation for Prometheus metrics is especially tricky, as aggregating absolute counters (counters that monotonically increase vs counters that only report delta since the previous report) across many sources is non-trivial.

- Clustering: Consistent hashing and routing at the aggregation tier, primarily in service of more sophisticated aggregation approaches.

- Automated blocking/elision based on control-plane driven configuration: Controlling metrics growth and spend is an important goal at many organizations. Automated systems to deploy blocking and metric point elision are an important strategy to reduce overall points per second that are ultimately being sent to the TSDB vendor.

A brief history of Pulse

At this point you might be asking yourself: “What is bitdrift doing releasing a server-side metrics proxy? I thought bitdrift is a mobile observability company?” A brief history of how Pulse came about follows. As we described during our public launch, bitdrift spun out of Lyft. Prior to the spinout the bitdrift team was responsible for two different pieces of technology within Lyft:- The mobile observability product that is now known as Capture.

- A set of technologies related to managing Lyft’s very large metrics infrastructure, focusing on overall performance, reliability, and cost control. This set of technologies together is called “MME” (metrics management engine).

A control plane driven approach to metrics

- A daemonset of Pulse proxies receives metrics from applications. This first layer performs initial transformation, batching, cardinality limiting, and similar processing before sending the metrics on to the aggregation tier.

- The aggregation tier receives all metrics and uses consistent hashing to make sure each metric is ultimately routed to the correct aggregation node for processing. Once on the right node, several things happen:

  - High-level aggregation occurs (e.g., creating service-level metrics from pod metrics).

  - Samples of observed metrics are sent to the control plane.

  - Lists of metrics to be explicitly blocked are received from the control plane (more on this below).

  - Data passes through various buffering and retry mechanisms before ultimately being sent on to the TSDB.

- A read proxy (not included as part of Pulse) sits between all users of metrics (dashboards and ad-hoc queries) and intercepts all metric queries. It forwards the queries to the control plane so that the control plane knows which metrics are actually read, whether manually or via alert queries.

- The control plane (also not included as part of Pulse, but reachable via well-specified APIs) merges the write-side samples from the aggregation tier with the read proxy data to determine which metrics are actually being used. It then dynamically creates blocklists based on policy to automatically block metrics that are written but never read, which in very large metrics infrastructures are often the vast majority of all metrics. The blocklists are served to the Pulse proxies, which then perform inline blocking and elision of the metrics stream, significantly reducing the overall points per second sent on to the TSDB.
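The daemonset layer is described as performing cardinality limiting before metrics reach the aggregation tier. The post does not say how Pulse does this, so the following is only a minimal sketch of one simple policy, assuming a fixed cap on distinct label sets per metric name; `CardinalityLimiter` and `max_series_per_metric` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, Set, Tuple

Labels = FrozenSet[Tuple[str, str]]


class CardinalityLimiter:
    """Hypothetical policy: cap the number of distinct label sets per metric name."""

    def __init__(self, max_series_per_metric: int) -> None:
        self._max = max_series_per_metric
        self._seen: Dict[str, Set[Labels]] = defaultdict(set)

    def admit(self, name: str, labels: Dict[str, str]) -> bool:
        key: Labels = frozenset(labels.items())  # label order does not matter
        series = self._seen[name]
        if key in series:
            return True  # already-known series always pass
        if len(series) >= self._max:
            return False  # new series beyond the cap are dropped
        series.add(key)
        return True


limiter = CardinalityLimiter(max_series_per_metric=2)
print([limiter.admit("req", {"user": u}) for u in ["a", "b", "c", "a"]])
# [True, True, False, True]
```

A production limiter would likely also age series out over time windows rather than remembering them forever; this sketch omits that for brevity.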
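The aggregation tier relies on consistent hashing so that all points that must be combined reach the same node. As an illustration rather than Pulse's actual routing code, here is a classic hash ring with virtual nodes. The choice to build the routing key without the `pod` label is our assumption, made so that every pod's points for one service-level series meet on a single node; `HashRing` and `routing_key` are hypothetical names.

```python
import bisect
import hashlib
from typing import Dict, List, Tuple


def _hash(key: str) -> int:
    # Stable 64-bit hash; Python's built-in hash() is randomized per process.
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes: List[str], vnodes: int = 64) -> None:
        # Each node owns many virtual points on the ring to even out the load.
        self._ring: List[Tuple[int, str]] = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._keys = [h for h, _ in self._ring]

    def node_for(self, key: str) -> str:
        # First ring point clockwise from the key's hash, wrapping at the end.
        idx = bisect.bisect(self._keys, _hash(key)) % len(self._ring)
        return self._ring[idx][1]


def routing_key(name: str, labels: Dict[str, str], drop: Tuple[str, ...] = ("pod",)) -> str:
    # Hypothetical: hash the series identity *after* removing labels that will be
    # aggregated away, so all pods' points for one series land on the same node.
    kept = sorted((k, v) for k, v in labels.items() if k not in drop)
    return name + "{" + ",".join(f"{k}={v}" for k, v in kept) + "}"


ring = HashRing(["agg-0", "agg-1", "agg-2"])
k1 = routing_key("http_requests_total", {"service": "api", "pod": "api-1"})
k2 = routing_key("http_requests_total", {"service": "api", "pod": "api-2"})
print(k1)  # http_requests_total{service=api}
print(ring.node_for(k1) == ring.node_for(k2))  # True
```

The benefit of consistent hashing over a plain `hash(key) % n` is that adding or removing an aggregation node only remaps the keys that node owned, rather than reshuffling nearly every series.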
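High-level aggregation of absolute counters across pods, such as dropping the `pod` label to build service-level metrics, requires per-source state. One standard approach, sketched here under our own assumptions rather than as Pulse's implementation, converts each source's cumulative value into a delta (treating any decrease as a restart from zero, similar to how Prometheus handles counter resets) and accumulates those deltas into a monotonically increasing aggregate. `CounterAggregator` is a hypothetical name. In the example, a naive sum of raw values would fall from 150 to 140 when `pod-b` restarts, while the aggregate keeps increasing.

```python
from collections import defaultdict
from typing import Dict, Tuple


class CounterAggregator:
    """Sums absolute (cumulative) counters from many sources into one
    monotonically increasing aggregate, tolerating per-source resets."""

    def __init__(self) -> None:
        self._last: Dict[Tuple[str, str], float] = {}  # (series, source) -> last raw value
        self._total: Dict[str, float] = defaultdict(float)  # series -> aggregate

    def observe(self, series: str, source: str, value: float) -> None:
        key = (series, source)
        prev = self._last.get(key)
        if prev is None:
            delta = 0.0  # first sighting only establishes a baseline
        elif value < prev:
            delta = value  # counter went backwards: assume the source restarted at 0
        else:
            delta = value - prev
        self._last[key] = value
        self._total[series] += delta

    def value(self, series: str) -> float:
        return self._total[series]


agg = CounterAggregator()
series = "requests_total{service=api}"  # pod label already dropped
agg.observe(series, "pod-a", 100)
agg.observe(series, "pod-b", 50)
agg.observe(series, "pod-a", 130)  # +30
agg.observe(series, "pod-b", 10)  # decrease => reset, +10
print(agg.value(series))  # 40.0
```

Design choice: the first value seen from a source only sets a baseline, so counts accumulated before the aggregator first saw that source are not included. A real implementation would also need to expire state for sources that disappear.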
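The control plane's core policy, blocking metrics that are written but never read, reduces to a set difference between the write-side samples and the read proxy's observations, followed by inline filtering at the proxies. The sketch below is hypothetical: the `protected` exemption set and the function names are our assumptions, and real policies are presumably richer than a single set difference.

```python
from typing import AbstractSet, Iterable, Iterator, Set, Tuple

Point = Tuple[str, float]  # (metric name, value); labels omitted for brevity


def build_blocklist(
    written: AbstractSet[str],
    read: AbstractSet[str],
    protected: AbstractSet[str] = frozenset(),
) -> Set[str]:
    """Hypothetical policy: block metrics that are written but never read,
    except those explicitly protected."""
    return set(written - read) - set(protected)


def filter_stream(points: Iterable[Point], blocklist: AbstractSet[str]) -> Iterator[Point]:
    # Inline blocking at the proxy: blocked points never reach the TSDB.
    for name, value in points:
        if name not in blocklist:
            yield name, value


written = {"http_requests_total", "debug_cache_probe", "queue_depth"}
read = {"http_requests_total"}
blocklist = build_blocklist(written, read, protected={"queue_depth"})
points = [("http_requests_total", 1.0), ("debug_cache_probe", 2.0), ("queue_depth", 3.0)]
print(list(filter_stream(points, blocklist)))
# [('http_requests_total', 1.0), ('queue_depth', 3.0)]
```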